Implementing Generative AI with Speed and Safety: Insights from McKinsey

According to McKinsey research, generative AI could add up to $4.4 trillion in value to the global economy annually across the use cases McKinsey analyzed. That estimate would roughly double if it included the impact of embedding generative AI into software currently used for tasks beyond those use cases.

The promise of generative AI is immense, but so are the risks. Companies must proceed carefully to capture the technology's full potential while mitigating its downsides. Two McKinsey publications, "The state of AI in early 2024" and "Implementing generative AI with speed and safety," outline a strategic approach to implementing generative AI responsibly.

The Strategic Approach to Mitigating Risks

To move quickly and responsibly with generative AI, companies need a strategic approach that balances risk management with innovation. Here is a roadmap to guide organizations, inspired by McKinsey’s recommendations:

1. Understand Inbound Exposures

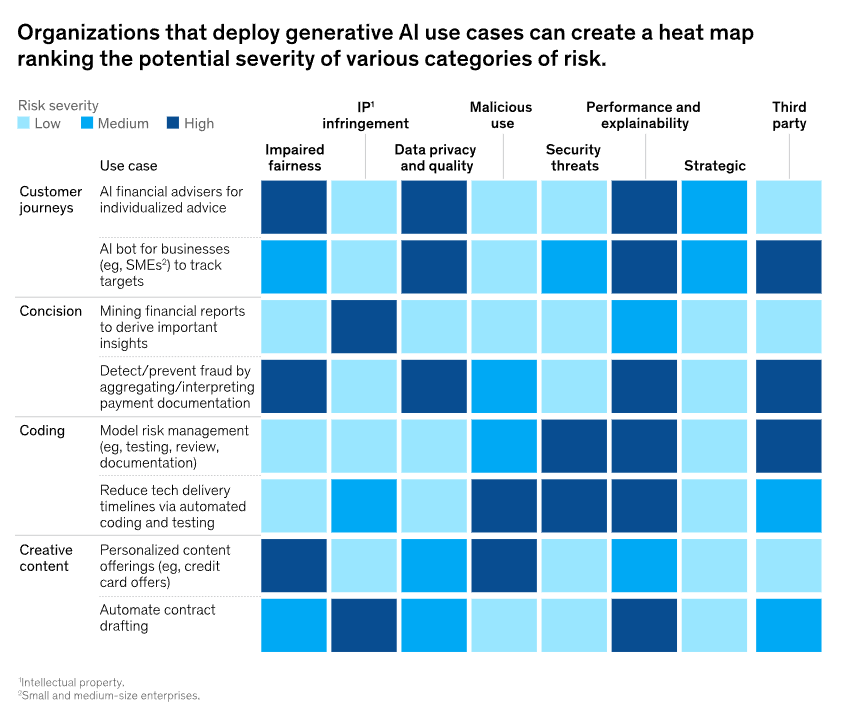

Organizations should launch a sprint to identify potential inbound risks associated with generative AI. This includes assessing security threats from AI-enabled malware, third-party risks, malicious use such as deepfakes, and intellectual property infringement.

Source: McKinsey, 2024

2. Develop a Comprehensive Risk View

Organizations need a thorough understanding of how material each generative AI-related risk is across domains. With that view in place, they should develop a range of options, both technical and non-technical, to manage these risks effectively.

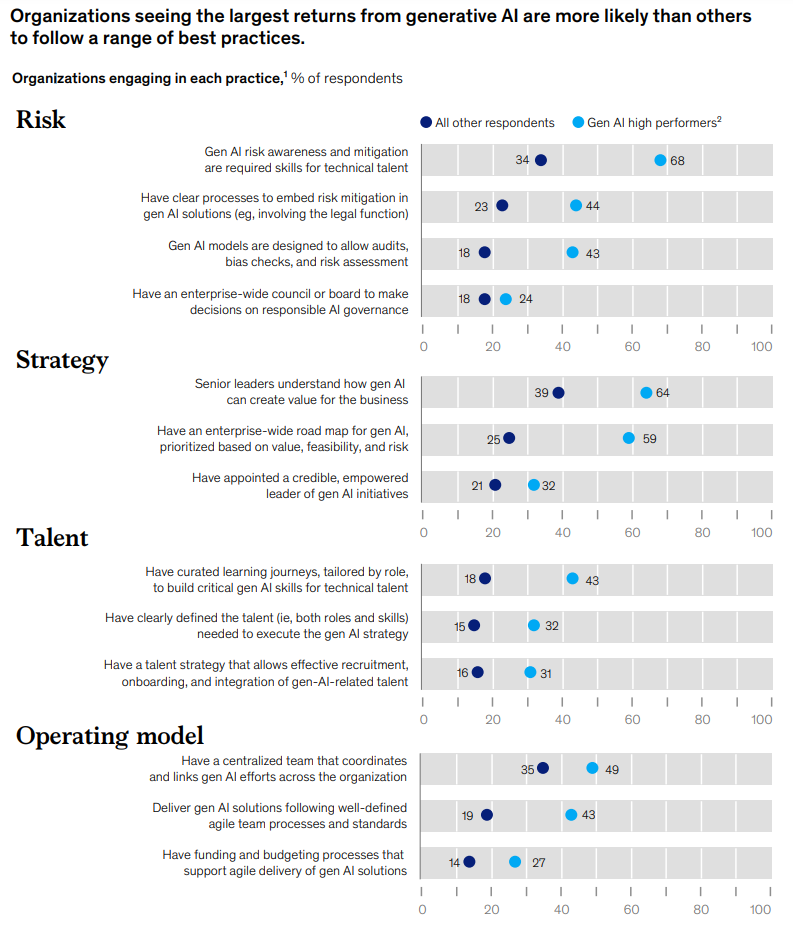

3. Establish Robust Governance

A balanced governance structure is essential so that decisions about generative AI can be made quickly and still receive proper review. Wherever possible, this structure should adapt existing frameworks rather than create new ones, minimizing disruption while ensuring comprehensive oversight.

Source: McKinsey, 2024

4. Embed Governance in Operations

The governance framework should be integrated into the operating model, drawing on expertise across the organization. Training for end users is vital to ensure they understand and can manage the risks associated with generative AI.

5. Build a Responsible AI Culture

The success of generative AI implementation hinges on establishing a responsible AI culture within the organization. This involves several key steps:

Form a Cross-Functional Steering Group: This group, including business, technology, data, privacy, legal, and compliance leaders, should meet regularly to manage AI risks and make critical decisions.

Develop Responsible AI Guidelines: Establish guiding principles agreed upon by the executive team and the board. These guidelines should address issues like the use of AI in personalized marketing, employment decisions, and the conditions under which AI outputs can be put into production without human review.

Foster Responsible AI Talent and Culture: Train employees at all levels on responsible AI use, ensuring they understand the risks and how to engage with the technology safely.

6. Practical Steps for Risk Mitigation

Mitigating risks in generative AI involves both technical and non-technical measures. For example, in deploying an AI-enabled HR chatbot, organizations might implement various safeguards:

Clarifying User Inputs: The chatbot can ask additional questions to ensure it understands the user's query accurately.

Limiting Data Access: Restrict the chatbot’s access to personal information to prevent misuse.

Providing Citations: Ensure the chatbot cites sources to allow for fact-checking.
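To make the HR chatbot safeguards above more concrete, here is a minimal Python sketch under stated assumptions. Everything in it is hypothetical and purely illustrative. The `Document` and `HRChatbot` names, the keyword-overlap retrieval standing in for a real language model and search index, the `min_overlap` threshold used as a crude signal that a query was understood, and the e-mail regex used as an example of redaction are all invented for this sketch. It is not McKinsey's method or any vendor's API.

```python
import re
from dataclasses import dataclass

# Hypothetical sketch: keyword overlap stands in for a real LLM + search index.

@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    contains_personal_data: bool = False  # hypothetical flag set by data owners

# Illustrative pattern only; real PII detection needs far more than one regex.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def redact(text: str) -> str:
    """Mask e-mail addresses so they are not processed or echoed back."""
    return EMAIL_RE.sub("[REDACTED]", text)

def tokenize(text: str) -> set[str]:
    """Lowercase word set used for the crude overlap score."""
    return set(re.findall(r"[a-z]+", text.lower()))

class HRChatbot:
    def __init__(self, documents: list[Document], min_overlap: int = 2):
        # Limiting data access: personal records are dropped up front,
        # so retrieval can never surface them.
        self.documents = [d for d in documents if not d.contains_personal_data]
        # Assumed threshold: fewer shared words means "query not understood".
        self.min_overlap = min_overlap

    def _retrieve(self, query: str) -> list[Document]:
        q = tokenize(query)
        scored = [(len(q & tokenize(d.text)), d) for d in self.documents]
        ranked = sorted(scored, key=lambda pair: -pair[0])
        return [d for score, d in ranked if score >= self.min_overlap]

    def answer(self, query: str) -> dict:
        hits = self._retrieve(redact(query))
        if not hits:
            # Clarifying user input: ask a follow-up instead of guessing.
            return {
                "type": "clarify",
                "message": "Which policy do you mean (for example, leave, benefits, or payroll)?",
            }
        # Providing citations: return the IDs of every supporting document.
        return {
            "type": "answer",
            "message": hits[0].text,
            "citations": [d.doc_id for d in hits],
        }

docs = [
    Document("HR-POL-001", "Employees accrue annual leave at two days per month of service."),
    Document("HR-POL-002", "Parental leave policy grants sixteen weeks of paid leave."),
    Document("HR-REC-778", "Salary record for employee jane@example.com", contains_personal_data=True),
]
bot = HRChatbot(docs)
print(bot.answer("How much annual leave do I accrue?"))
print(bot.answer("Show the salary record for jane"))
```

In this sketch, the first query returns an answer citing `HR-POL-001`, while the second gets a clarifying question because the salary record was excluded before retrieval. Filtering personal data at the access layer, rather than relying on the model to refuse, is one way to make the "limit data access" safeguard hold even when prompts are adversarial.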

7. Balancing Speed and Safety

Using generative AI requires adapting governance structures to meet new demands. Organizations should leverage existing frameworks by expanding their scope to cover AI-specific risks. This includes forming a responsible AI steering group, establishing guidelines, and fostering a culture of responsible AI use.

Generative AI holds the promise of redefining how businesses operate, offering significant productivity gains and innovation opportunities. However, to capture these benefits sustainably, companies must integrate effective risk management from the start.

By following a strategic roadmap and fostering a culture of responsible AI use, organizations can navigate the complexities of generative AI safely and effectively, ensuring they maximize its potential while mitigating risks.